What are deadlocks

"Deadlock" is a word we hear quite a lot as programmers, but what are they? How are they classified and how to avoid them? In this post, I would try to summarise some research over the last 50 years and provide a clear view of the problem and possible solutions.

Deadlock or deadly embrace, as Prof. Dijkstra called them (If the name ring the bell— Dijkstra's graph algorithm is the references the same Dijkstra) is a situation of endless waiting, in which a group of processes cannot continue their work until "external forces" perform certain actions. The word "process" here has nothing to do with processes in the operating system. The process in the deadlock is an automaton with a set of states. Such states can be "process A does not own resources" or "process B owns an alpha resource and is trying to get a beta resource". The definition is not quite correct in 100% of situations, but it is suitable for discussing most approaches.

To begin with, let's consider the simplest case: a deadlock on one machine caused by competitive access to a resource from several processes. A resource can be either a synchronization primitive, such as a mutex, or a device, such as a printer.

Such a Deadlock is called either a resource or interleaved deadlock. The first is a more familiar and popular name, the second I've found only in [4]. For a deadlock to occur, 3 conditions must be met:

- Exclusive access. Only one process can capture a resource at a time.

- No preemption. The process that has captured the resource cannot be interrupted.

- Hold and wait condition. A situation where the process first captured one resource and then waits for a second must be possible. Without releasing the first resource of course.

The presence of these conditions in the system does not guarantee the occurrence of a deadlock! These are only necessary conditions. Their presence means the existence of an unsafe region[5]. Consider again that processes may have some state that is they could capture some resources. If processes can capture resources in a way that none other processes could continue — this set of processes' states is named an unsafe region.

If there is an unsafe region exists, it is sufficient to find only one set of states ending up in an unsafe region for a deadlock to occur. This is possible with one last condition:

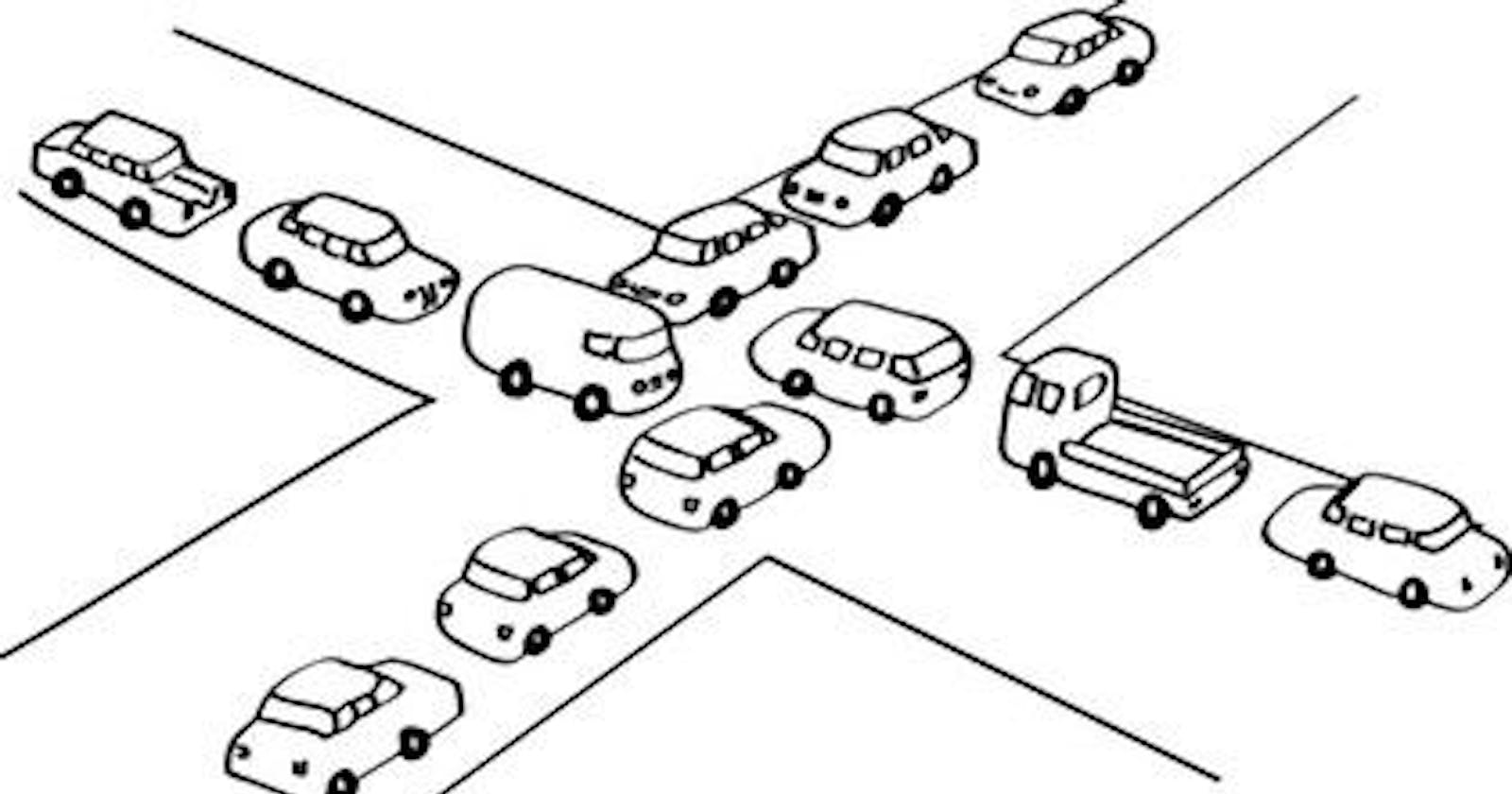

Circular wait condition. Processes and resources should be able to be assembled into a "chain". For example Process A owns resource a while it wants to capture resource b, which is owned by process B, which wants to take over resource a. If we denote these relations with arrows:

a -> A meaning process A owns resource a.

A -> b meaning process A wants to capture resource b.

We get a circle (well... a square):

a -> A

^ |

| v

B <- b

The insights

The first insight: deadlocks do not arise just like that, they need 3 conditions: exclusive access, no-preemption, hold-and-wait + one sufficient condition: circular wait.

The second insight: to overcome the deadlock, it is enough to get rid of one of the conditions!

Point 2 is especially interesting and allows us to look at working with resources and familiar "programming tricks" with different eyes. For example: why are locks often divided into read-only and write-only locks? For performance, of course. But also because a deadlock cannot occur in a read-only lock, since it is not exclusive (the first condition)!

Or recently I accidentally made a non-concurrent index in Postgres on a table with a couple of hundred million records and everything went to a grinding halt. The solution to the problem is preemption! We manually cancel the transaction and the deadlock ceases to exist. And the masters of computer science knew this back in the 70s. Similar mechanisms can also be automated. Unfortunately, it's not so simple. On the one hand, detecting deadlocks is essentially a graph problem, where you just need to correctly walk along the edges from processes to resources. On the other hand, the graph is constantly changing literally as you walk through it. It seems there are no solutions to the problem in general, but in specific cases, for example, only in the database, there is something you can do and it even works in practice.

Things get interesting with the hold-and-wait condition too. For example, what if the process immediately requested all the resources it needed? Or at least it would capture them gradually, but if unsuccessful the process would release all those already captured. This approach, it turns out, also removes the problem of deadlocks. Unfortunately, not every process knows in advance neither all the resources it needs nor the order in which they will be captured. Again in the general case, it is difficult to solve the problem of deadlocks by knowing the resources required in advance, but in some cases, it is much easier. For example, we could assume that the process knows in advance how much memory and CPU a new virtual machine/container needs. Those are also limited resources. What if there are no more resources available? In this case, you don't usually end up with a deadlock. More likely, a process that can not get the required resources would be re-relocated to another machine. Still, by not allowing hold-and-wait conditions, you may make assigning machines to processes easier.

Finally, circular-wait. Let's make sure that all resources could only be captured in a certain order. For example, let's list all the resources in the system from 1 to n. And add a condition: if a process has captured resource k where 1 <= k <= n, it can only capture any other resource with the number m, where m < k. In other words, the process first needs to capture the most "high-level" resource, and then all the lower resources. The presence of numbered resources and one condition for their capture also allows you to get rid of deadlocks. The full proof is in [5].

All these approaches can be broadly divided into 3 categories:

- Detection. Approaches that use the graph of resources and their dependencies to detect deadlocks. Preemption is the main weapon of deadlock resolution where they are found.

- Prevention. Goal: to design the system in such a way that deadlocks are impossible. For example number of resources and allowing capturing only "lower-level" resources. Or prohibit capturing a resource, and then waiting for the second one.

- Avoidance. Approaches in which the system moves only from one "safe" state to another. The decision to allow capturing a resource is made dynamically, for example during a request. The most popular algorithm: the banker's algorithm. Unfortunately, it requires that processes know what resources they need before starting the execution.

- The ostrich algorithm. Or do-nothing algorithm. A strategy in which a programmer is being assigned to an on-call and is given a "cancel" button.

Resources:

- E.G. Coffman, M. Elphick, A. Shoshani. System Deadlocks.

- Richard C. Holt. Some deadlock properties of computer systems.

- S.S. Isloor, T.A. Marsland. The Deadlock Problem: An Overview.

- Gertrude Neuman Levine. Defining deadlocks.

- Charles M. Shub. A Unified Treatment of Deadlock.